Something's Wrong with Rotten Tomatoes

...and 'The Marvels' was positively review bombed.

‘The Marvels’ Was Positively Review Bombed

I’m fairly confident there are far too many ‘5’ star reviews for ‘The Marvels’ (2023). Depending on the snapshot, between 37.59% and 41.38% of all reviews were ‘5’ stars. A snapshot is a day on which all reviews were scraped; that’s explained later, if anyone makes it that far.

I would be more open to believing that number was legitimate if the ‘4.5’ reviews weren’t skewed so low. Every other rating follows a trend; only 4.5 breaks it. I would expect the 4.5 share to be around 27% on snapshot 1 and 23% on snapshot 5.

A 9% share does not make sense to me. Why would only about half as many people give it 4.5 stars as gave it 4 stars, when more than twice as many people gave it 5 stars as gave it 4?

For a film that has lost hundreds of millions of dollars, that no one is going to see, and that was pulled from cinemas barely a month after release, that screams positive review bombing.
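To make a percentage comparison like this reproducible, here's a minimal Ruby sketch (Ruby because the scraper was Ruby, but this is not that script) that turns one snapshot's scores into a share per star rating. It assumes the scores are already extracted as Floats from 0.5 to 5.0; `rating_shares` is a hypothetical name and the sample data is made up, not taken from the workbook.

```ruby
# Share (in %) of one snapshot's reviews at each star rating, 5.0 down to 0.5.
# Assumes `scores` holds Floats (5.0, not 5), one entry per review.
def rating_shares(scores)
  total = scores.size.to_f
  counts = scores.tally # Ruby 2.7+: { 5.0 => 3, 4.0 => 2, ... }
  stars = (1..10).map { |i| i / 2.0 }.reverse # [5.0, 4.5, ..., 0.5]
  stars.to_h { |star| [star, (100 * counts.fetch(star, 0) / total).round(2)] }
end

# Made-up example scores, not real data from the workbook.
snapshot = [5.0, 5.0, 5.0, 4.0, 4.0, 4.5, 3.0, 0.5]
rating_shares(snapshot).each do |star, pct|
  puts format("%.1f stars: %6.2f%%", star, pct)
end
```

Running it once per snapshot gives the columns needed for a chart like the one in ‘charts-2’.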

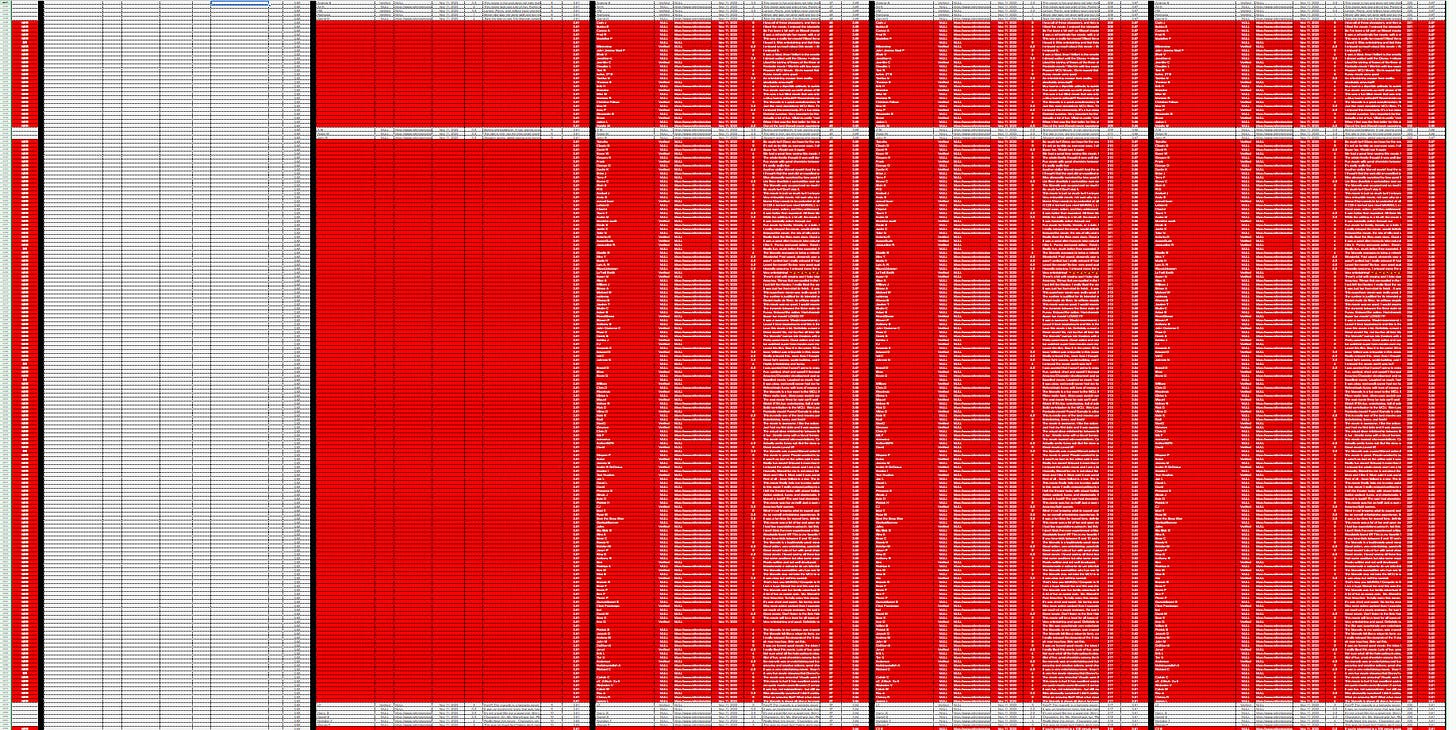

The chart above is evidence from the ‘charts-2’ tab of my master workbook.

Was it negatively review bombed as well, though? It’s possible. However, there is an expected trend from ‘1.5’ to ‘1’ to ‘0.5’: the count almost doubles each time, with no ludicrous jump. A review bomb would be a disproportionately large number of high or low reviews, and that’s only happening with ‘5’ star reviews. Quite conclusive.
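A rough way to put the "no ludicrous jump" argument into numbers is to compare each star bucket with its neighbour and flag large ratios. This is a hypothetical sketch: the `suspicious_jumps` name and the 3.0 threshold are illustrative assumptions, and the counts below are invented purely to show the shape of the output.

```ruby
# Flags neighbouring star buckets whose review counts differ by more than
# `threshold` times. The 3.0 default is an illustrative assumption.
def suspicious_jumps(counts, threshold: 3.0)
  counts.keys.sort.each_cons(2).filter_map do |lower, upper|
    a = counts[lower]
    b = counts[upper]
    next if a.zero? || b.zero? # avoid dividing by an empty bucket

    ratio = [a, b].max.to_f / [a, b].min
    [lower, upper, ratio.round(2)] if ratio > threshold
  end
end

# Invented counts: a steady trend apart from a dip at 4.5 and a spike at 5.
counts = { 0.5 => 400, 1.0 => 200, 1.5 => 100, 2.0 => 90, 2.5 => 100,
           3.0 => 200, 3.5 => 250, 4.0 => 600, 4.5 => 150, 5.0 => 1600 }
p suspicious_jumps(counts)
```

With these made-up counts, the doubling between 0.5, 1 and 1.5 passes, while the 4 to 4.5 dip and the 4.5 to 5 spike are both flagged.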

Given how many real movie fans know these websites are compromised, it’s likely they don’t even bother to review bomb it. So who’s doing it? Come on, we all know!

Now that the sensationalist clickbait claim is out of the way, I’ll explain everything in more muddled detail. There’s a video linked further down which may explain it better.

Don’t Trust Review Sites

IMDB is owned by Amazon. Over the years there have been reports of rating manipulation by the company, or interested parties, to the point where Amazon entered disaster recovery mode for their ‘The Rings of Power’ series.

The show was so bad that Amazon flat out hid all reviews. Uninformed people wouldn’t know how terrible it was; they would watch, turn it off within minutes, and never see the negative ratings. Why is that bad?

You can’t take back what you’ve already seen. More people would watch the series to see how bad it was, so Amazon could boast large viewing figures and falsely claim that people enjoyed it.

Ignore the fact everyone hated the show. The reviews and ratings were hidden so it’s not a problem. More people would watch, even for a short time. That’s a good thing according to Amazon.

Unfortunately, there are more review sites than IMDB and Amazon. The public made it very clear how crap the show was.

Historically, Metacritic was a more accepted metric for ratings than Rotten Tomatoes. I would guess this is because the name sounds professional and it’s far easier to manipulate? Companies like being able to tweak scores. How would I know? I used to be a semi-professional review manipulator.

Many game studios have been graced by my presence. For a large one, a very large one, I submitted many fake positive ratings on Metacritic. I could say ‘we’, but I’ll take the hit. Neither the company nor anyone involved with it ever asked me to post reviews. I acted completely independently.

That makes me the problem, right? Imagine working flat out for 2 years on a game. You work evenings, weekends, don’t take holidays, all to make a product that someone might enjoy playing. Then day 1 incel reviews flood the Internet with dumb shit like:

“I ahve never played the game but I do not like the trailers is bad and not what I like.. try harder.” - 0/10

Yeah, I’ve read enough of those to accurately replicate the language pattern. Don’t like it? Don’t buy it. You can’t leave a review for something you haven’t experienced. Well, you can on the Internet.

Some people had bonuses and project agreements based on ratings, not sales. This doesn’t pertain to me. I was paid shit money and moved between projects regardless of how well or badly a title performed. But I deemed it unacceptable that the jobs of 75 to 350 other people were at risk due to incels. I did my part to fix the problem and don’t regret anything.

Rotten Tomatoes is now ‘the current thing’. For some reason, people trust it. Although that’s fading fast. That’s not surprising when even I’ve noticed seemingly random oddities with some reviews!

What I’ve Seen

There’s no implication of any wrongdoing here. I’ll flat out say it! No, I’m not convinced of any ill intent behind what I’ve uncovered. It wouldn’t make complete sense if it were intentional.

Reviews seem to be appearing and disappearing from the Rotten Tomatoes site. People shouldn’t be ostracised for immediately suspecting shenanigans. After all, this happens a lot. But the anomalies make almost zero impact on the average score. At worst, the difference could change the average rating by 0.1. Would you see a movie because it was rated 3.7 instead of 3.6? I wouldn’t care.

Then there are times when the average rating drops. Why would ratings be manipulated downwards? Unless everyone working there is an idiot? A definite possibility.

Perhaps the system can’t process all the reviews being submitted and there’s a backlog to work through? Then why are these oddities happening out of order? If the system were lagging, it should have issues processing recent reviews, not random historical ones.

Either someone is messing with the system or it’s badly made. For a platform which creative industries and fake news use to gauge a project’s success, this is very suspicious.

Thinking caps on.

What I Did

First, I needed a movie. ‘The Marvels’ was released on 10/11/2023 and was obviously going to generate some controversy. The usual shills and clickbait channels were running psy-ops 24/7, claiming “I hate the film but it has positive reviews, it’s being review bombed” and/or “I like the film but it has negative reviews, it’s being review bombed”.

Where’s your evidence?

Today is 08/12/2023. Nearly a month later. Yeah, I have a real job and need to fit my ramblings around that. I took 5 snapshots of reviews for this movie over different days:

Snapshot 1: 10/11/2023

Taken in the evening, so people had plenty of time to submit early reviews

There were 1,630 reviews!

Snapshot 2: 11/11/2023

1 day after release, 1 day from previous run

Snapshot 3: 12/11/2023

2 days after release, 1 day from previous run

Snapshot 4: 21/11/2023

11 days after release, 9 days from previous run

Snapshot 5: 24/11/2023

14 days after initial release, 3 days from previous run

This snapshot was run twice to prove my script works with 100% accuracy

The reviews were compiled into a single Excel worksheet and formatted for (my) readability.

My reasoning behind capturing reviews over different days was to confirm consistency. Reviews should carry from day to day, unless they were deleted or edited. Users seem to be able to perform those actions. Does it happen a lot? Someone on line 5293 changed their review from:

“Lots of fun! Great to see these characters!”

to:

“Lots of fun! It's great to see these characters and their stories continue!”

Why would anyone bother? Is that a bot trying to look real?
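Spotting edits like this is a straightforward diff between two snapshots. The sketch below is a hypothetical illustration, not my macro workbook: it assumes each review is a Hash with `:name`, `:score` and `:text`, and it matches reviews by name, which breaks if two reviewers share a name. The reviewer names are made up.

```ruby
# Compares two snapshots and reports which reviewers vanished, appeared or
# changed their review. Reviews are matched on :name (an assumption; account
# URLs, where logged, would be a sturdier key).
def diff_snapshots(before, after)
  old_by_name = before.to_h { |r| [r[:name], r] }
  new_by_name = after.to_h { |r| [r[:name], r] }
  common = old_by_name.keys & new_by_name.keys
  {
    removed: old_by_name.keys - new_by_name.keys,
    added: new_by_name.keys - old_by_name.keys,
    edited: common.reject { |name| old_by_name[name] == new_by_name[name] }
  }
end

# Hypothetical reviewers; the edited text mirrors the example above.
before = [
  { name: "fan_a", score: 5.0, text: "Lots of fun! Great to see these characters!" },
  { name: "fan_b", score: 5.0, text: "Great fun Marvel movie." }
]
after = [
  { name: "fan_a", score: 5.0,
    text: "Lots of fun! It's great to see these characters and their stories continue!" },
  { name: "fan_c", score: 1.5, text: "Needs a better timing script" }
]
p diff_snapshots(before, after)
```

Here fan_a shows up as edited, fan_b as removed and fan_c as added.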

My idea was to track patterns in review scores, not the reviews themselves. I was hoping there might be easily identifiable peaks in ratings at specific times, which would hint at bot activity. What I didn’t expect was for entire reviews to... be weird. So I concentrated on reviews and somewhat ignored ratings.

The final snapshot, 2 weeks after ‘The Marvels’ released, ended with 6,747 reviews.

How I Did It

Web automation. I enter the Rotten Tomatoes movie review URL and a Ruby script pulls out the review details, page by page. Since I ran this script, however, the website has changed its design and no longer uses page switching. That would have been a lot simpler for me to scrape if it had been implemented sooner!

The site already sorts reviews by latest to oldest. So all I need to do is pull the data as it’s displayed and everything should be ordered correctly for Excel.
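The page-by-page loop with courtesy delays might look something like this. It's a sketch under assumptions: `fetch_page` stands in for whatever downloads and parses one page (hypothetical, and not shown), and the default 5 second delay is illustrative, not the value my script used.

```ruby
# Walks pages 1, 2, 3... until a page comes back empty, keeping the site's
# newest-to-oldest order. `fetch_page` is a hypothetical callable:
# page number -> Array of reviews (empty when there are no more pages).
def scrape_all(fetch_page, delay_seconds: 5, max_pages: 1_000)
  reviews = []
  (1..max_pages).each do |page|
    batch = fetch_page.call(page)
    break if batch.empty?

    reviews.concat(batch)  # order is preserved, ready for spreadsheet import
    sleep(delay_seconds)   # courtesy delay between requests
  end
  reviews
end

# Fake three-page "site" so the loop can be run without any network access.
fake_site = { 1 => %w[r1 r2], 2 => %w[r3 r4], 3 => %w[r5] }
all = scrape_all(->(page) { fake_site.fetch(page, []) }, delay_seconds: 0)
p all
```

The `max_pages` cap is just a safety net so a misbehaving last page can't loop forever.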

Data was logged in 3 ways:

JSON - from an older design, but I left it in

CSV - to easily import into Excel

HTML - each page has its HTML dumped locally so I could investigate errors and confirm the reviews logged in the JSON/CSV match what users see

I only care about what users see, not anything going on behind the scenes or through an API
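Writing those three outputs needs nothing beyond Ruby's standard library. This is a hypothetical sketch: `log_snapshot`, the file names and the name/score/text columns are assumptions, not the original script's layout.

```ruby
require "csv"
require "fileutils"
require "json"
require "tmpdir"

# Writes one snapshot as JSON, CSV and raw HTML page dumps into `dir`.
def log_snapshot(dir, reviews, html_pages)
  FileUtils.mkdir_p(dir)

  # JSON: full records, handy for scripting
  File.write(File.join(dir, "reviews.json"), JSON.pretty_generate(reviews))

  # CSV: one row per review, ready to import into a spreadsheet
  CSV.open(File.join(dir, "reviews.csv"), "w") do |csv|
    csv << %w[name score text]
    reviews.each { |r| csv << [r[:name], r[:score], r[:text]] }
  end

  # HTML: raw page dumps, so CSV rows can be checked against what users saw
  html_pages.each_with_index do |html, i|
    File.write(File.join(dir, format("page-%03d.html", i + 1)), html)
  end
end

Dir.mktmpdir do |dir|
  reviews = [{ name: "fan_a", score: 5.0, text: "Great fun Marvel movie." }]
  log_snapshot(dir, reviews, ["<html>page 1</html>"])
  p Dir.children(dir).sort
end
```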

There was a slight hiccup with the day 1 snapshot CSV, but no data was lost. The script threw a tantrum upon reaching the last page, resulting in some duplicate results. I ran a duplicate filter in Excel to remove them, then confirmed the HTML matched the CSV. Which it did.
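Excel's duplicate filter boils down to keeping the first copy of each identical row. Here's a minimal sketch that also reports which rows were dropped, so they can be checked against the HTML dumps; `drop_duplicates` is a hypothetical name and the rows are made up.

```ruby
# Keeps the first copy of every identical row and returns the 1-based numbers
# of the rows that were dropped, so they can be checked against the HTML dumps.
def drop_duplicates(rows)
  seen = {}
  kept = []
  dropped = []
  rows.each_with_index do |row, i|
    if seen.key?(row)
      dropped << i + 1
    else
      seen[row] = true
      kept << row
    end
  end
  [kept, dropped]
end

rows = [["fan_a", 5.0, "Great fun Marvel movie."],
        ["fan_b", 1.0, "The movie was really bad. What a waste of time"],
        ["fan_a", 5.0, "Great fun Marvel movie."]] # repeated by a misbehaving last page
kept, dropped = drop_duplicates(rows)
puts "kept #{kept.size} rows, dropped rows #{dropped.inspect}"
```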

Considering the amount of data, I wasn’t eager to manually interrogate it for anomalies, so I wrote a few macros to highlight differences. The macro workbook won’t be publicly accessible because DON’T DOWNLOAD MACRO-ENABLED WORKBOOKS. But feel free to download all the libraries you want from dll-driver-download-fix.whatever. I’m confident they’re not riddled with malware.

I’m unsure if Rotten Tomatoes has an API and I don’t care. I want the exact reviews users see. An API could be manipulated. After all, who’s going to check? It’s not like they would give anyone database access to confirm.

To reduce load on the website, I added a few courtesy delays into the script. The largest number of pages scraped for a snapshot was 338. I would hope that is nowhere near enough to bring down their website, but adding a delay is a nice thing to do. Snapshot 5 took the longest to run, at around 40 minutes. It’s automated, so what do I care? Run it in the background and do something else.

True, it’s a live website and reviews could be added and removed while I’m scraping. 40 minutes is a long time, with both incel rage downvoters and incel rage upvoters active. I can filter the duplicates out in Excel, and later on I’ll cover why deleted reviews are almost never a problem.

Here’s a video which may explain the process and data a little better:

Accessing the Data

If interested, I would recommend looking through the results for yourself. Something may jump out at you which I missed. I don’t have Excel, so I used Google Docs, which means anyone can view the data without downloading it. Sometimes being cheap works out for the best.

Here are the results:

Use this for analysis

Snapshot 5 confirmation workbook

Ignore this unless specified

It’s to prove my script works

HTML and CSV evidence files (79MB)

The JSON was deleted because it’s not needed

…I foolishly deleted both the JSON and CSV for snapshot 3, but the HTML files are all present

The master workbook is all you need to look at.

The Master Workbook

Tabs are:

‘master’ - a compilation of all reviews, use this

Snapshot tabs have all reviews for that snapshot by date run:

‘10112023’, ‘11112023’, ‘12112023’, ‘21112023’, ‘24112023’

Charts:

‘charts-1’ - (useless) average ratings as each review entered the system

‘charts-2’ - basic counts for number of total star ratings per snapshot

The ‘master’ tab is a compilation of all 5 snapshots; ordered left to right, earliest to latest. Reviews are ordered descending, latest to oldest. Give it a scroll and it should make sense.

Lines are highlighted in red if suspicious. There’s also a ‘Suspicious’ column on the far left for filtering, which contains a flag if the review differs on at least one of the days based on the following:

S = Score changed (rating)

R = Review changed (common)

N = Name changed (infrequent)

These flags can be combined. ‘NSR’ is the most common flag. It almost always means a review has been removed, added, or removed and then added again. There are 502 of these entries out of 563 suspicious rows (89.2%).

Some of these ‘NSR’ reviews were removed and appeared again later with identical content. Could this indicate false flagging?

Others disappeared then reappeared with slightly different content. Were they flagged, edited and approved? Or are there some shenanigans going on with a database admin or code?

A yellow line indicates the end of time! This means there were no more reviews before that row. Anything above it, in the same column, won’t appear red if there’s an issue on subsequent days.
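The flag logic described above can be approximated in a few lines. This is an assumption-laden sketch, not the macro workbook: each snapshot cell is a Hash with `:name`, `:score` and `:text`, or `nil` when the review was missing that day, and only snapshots below the yellow line should be passed in. A missing review differs in all three fields at once, which is why it surfaces as ‘NSR’.

```ruby
# Rebuilds a 'Suspicious' flag for one master row. `cells` has one entry per
# snapshot: a Hash with :name, :score and :text, or nil if the review was not
# found that day. Only pass snapshots from below the yellow "end of time" line.
FIELDS = { "N" => :name, "S" => :score, "R" => :text }.freeze

def suspicious_flags(cells)
  FIELDS.filter_map do |flag, field|
    values = cells.map { |cell| cell && cell[field] } # nil for a missing review
    flag if values.uniq.size > 1
  end.join
end

# Hypothetical review, loosely based on the examples in this post.
review = { name: "fan_b", score: 5.0, text: "Great fun Marvel movie." }
p suspicious_flags([review, review, nil, nil, nil])                # vanished after day 2
p suspicious_flags([review, review.merge(text: "Great fun!")])    # text edited
```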

Let’s look at some reviews and possible issues…

[Line 6306] 5 stars

“Good, not great. Fun action, lots of laughs, and great action scenes. I think Marvel movies get hate for just being average. This movie is average and I really liked it.”

If it’s only ‘good, not great’ and ‘average’, why give it a 5 star score? A better question may be why this review appeared out of nowhere on 12/11/2023. It should have been visible on 10/11/2023 and 11/11/2023 due to its position in the table.

My 10/11/2023 scrape was in the evening. Possibly this review was submitted earlier, flagged, and took 2 days to be approved? That doesn’t sound likely. Unless the person who submitted the review had to update it? If they thought it important enough to scramble out a review on day 1, why wouldn’t they update it on day 1?

[Line 5912] 5 stars

“Great fun Marvel movie.”

This review disappeared after the 11/11/2023 snapshot and never appeared again. Why? It’s not offensive. Why remove a 5 star review? Is it a bot? How do you know? Is it an injected review to make the movie look good, then removed after enough legitimate 5 star reviews have been submitted?

Did the person change their mind, decide it wasn’t a 5 star review but delete it all instead of rating it again?

…that’s 2 out of 372. I do not see a pattern with review scores or anomaly times.

That big block of red from the last screenshot I cannot explain at all. It’s not a one-off occurrence either; it happens multiple times, with large numbers of reviews appearing in the system at once. This is what led me to believe it could be an import from a third party integrator, like Facebook, bulk inserting at a specific time of day?

If it were a third party, why are the reviews all grouped? That still implies they were all created in quick succession. So quick that no other Rotten Tomatoes reviews were submitted between them? That doesn’t happen in the real world.

Perhaps there was a system outage and the recovery process was to back date reviews? I can’t convince myself of that so I don’t know how I can sway anyone else.

These are the kind of oddities I’m seeing and can’t explain. Perhaps multiple processes are taking place at the same time, throwing me off?
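Finding blocks like that big red one doesn't need eyeballing. Here's a hypothetical sketch that scans the master ordering for runs of consecutive flagged rows; `flagged_blocks` is an illustrative name and the minimum run length of 5 is an arbitrary cut-off, not something derived from the data.

```ruby
# Returns 1-based row ranges where at least `min_length` consecutive rows are
# flagged. `flags` is one boolean per master row, newest review first.
def flagged_blocks(flags, min_length: 5)
  blocks = []
  start = nil
  (flags + [false]).each_with_index do |flagged, i| # trailing false closes a final run
    if flagged
      start ||= i
    elsif start
      blocks << ((start + 1)..i) if i - start >= min_length
      start = nil
    end
  end
  blocks
end

# Made-up flags: a short run of 3 (ignored) and a block of 6 (reported).
flags = [false, true, true, true, false] + [true] * 6 + [false]
p flagged_blocks(flags)
```

Comparing where these blocks fall in each snapshot would show whether they line up with a particular time of day, which is what a batch import would predict.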

The only consistency I could find was with the issues per snapshot:

Snapshots 1 to 5 had 372 total issues

6,765 total reviews

Snapshots 4 to 5 had 4 total issues

3,267 total reviews

This isn’t totally unexpected because snapshots 1, 2 and 3 would be missing almost half the reviews that are present in snapshots 4 and 5; 3267 / 6765 * 100 = 48.3%
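Putting the issue counts side by side as rates makes the gap clearer. This just takes the totals quoted above at face value; `issues_per_hundred` is a hypothetical helper name.

```ruby
# Issues per 100 reviews, taking the counts quoted above at face value.
def issues_per_hundred(issues, reviews)
  (100.0 * issues / reviews).round(2)
end

all_snapshots = issues_per_hundred(372, 6_765) # snapshots 1 to 5
late_snapshots = issues_per_hundred(4, 3_267)  # snapshots 4 and 5
share_of_reviews = (100.0 * 3_267 / 6_765).round(1)

puts "1-5: #{all_snapshots} issues per 100 reviews"
puts "4-5: #{late_snapshots} issues per 100 reviews"
puts "reviews in 4-5 as a share of all: #{share_of_reviews}%"
```

That works out to about 5.5 issues per 100 reviews against about 0.12, roughly 45 times fewer, while the 4 to 5 group still holds 48.3% of the reviews.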

The more columns there are to compare, the higher the chance of finding differing data. However, snapshots 1, 2 and 3 cover a 3 day period, while snapshots 4 and 5 cover a 4 day period. So I would still expect a hell of a lot more than 4 discrepancies between snapshots 4 and 5.

Perhaps whatever causes this only happens during the first few days when a movie goes live? Performance issues? Doubtful, both dataset groups contain over 3,000 reviews each (1 to 3 and 4 to 5). Perhaps entities messing with the system stopped after the first 3 days?

A 9 day gap exists between snapshots 3 (12/11/2023) and 4 (21/11/2023). Could that… something, something danger zone?

My Script Works

There are no problems with it. Other than the massive delay and poorly formatted code. It’s not poorly formatted, there are just so many non-programmers in programming jobs these days who can’t read actual code.

There is a very rare situation where a review could be skipped. The website is live, so reviews could appear and disappear while being scraped. Reminder: the website displays reviews from latest to oldest.

A new review wouldn’t cause an issue. It would appear on page 1 and push all reviews further down. If page 10 were being scraped and a review appeared on page 1, this would push the last review of page 10 onto page 11. When I scrape page 11, the same review would be picked up again.

All I do is run a duplicate check in Excel, note which lines were removed and ensure the HTML is correct. Fixed.

A deleted review is more troublesome as every other review would shift position back by 1. If page 10 were being scraped while a review on page 1 was deleted, the first review on page 11 would now be at the end of page 10. There’s no way to identify this as page 10 has already loaded. The review is lost and flagged by my macro workbook.

I guess a solution could be to refresh the page, checking reviews are identical before and after refreshing? There is still a chance of it occurring when transitioning to the next page though. Why bother then?

Both these scenarios presume a review is added or deleted on a page that has already been scraped. However, the added delays do increase scraping time and make the scenario more likely to occur. Going to have to live with it.
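The deletion case is easy to reproduce with a toy model. In this hypothetical simulation, 12 numbered reviews sit newest-first on 3-review pages, and the newest review is deleted after pages 1 and 2 have been scraped. Page size, review count and the `scrape_with_deletion` name are all illustrative assumptions.

```ruby
# Toy model of scraping a live, newest-first list while one review is deleted.
# The deletion happens just before page `deleted_after_page + 1` is fetched.
def scrape_with_deletion(reviews, per_page:, deleted_index:, deleted_after_page:)
  live = reviews.dup
  scraped = []
  page = 1
  loop do
    live.delete_at(deleted_index) if page == deleted_after_page + 1
    batch = live[(page - 1) * per_page, per_page] || [] # nil past the end
    break if batch.empty?

    scraped.concat(batch)
    page += 1
  end
  scraped
end

reviews = (1..12).to_a # 12 reviews, newest first, 3 per page
scraped = scrape_with_deletion(reviews, per_page: 3, deleted_index: 0, deleted_after_page: 2)
p reviews - scraped    # the review that was never captured
```

The deleted review itself was already captured on page 1; the one lost is review 7, which originally opened page 3 and slid back onto page 2 after it had been scraped.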

A skipped review is identifiable using the following criteria:

The review is highlighted as not present on 1 snapshot, but appears on all others

The review is the very first/last flagged on a page (dependent upon day)
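Those two criteria can be written as a simple check. This is a sketch under assumptions: `present` holds one boolean per snapshot, `position` is the review's 0-based index in the newest-first list for the snapshot it is missing from, and the page size is a parameter because the actual page size isn't stated here. `likely_scrape_skip?` is a hypothetical name.

```ruby
# Hypothetical check for the two criteria above. `present` has one boolean per
# snapshot; `position` is the review's 0-based index in the newest-first list
# of the snapshot it is missing from; `per_page` is an assumed page size.
def likely_scrape_skip?(present, position, per_page)
  missing_once = present.count(false) == 1 # absent from exactly one snapshot
  slot = position % per_page               # where it would sit on its page
  at_page_edge = slot.zero? || slot == per_page - 1
  missing_once && at_page_edge
end

# Review at index 6 of a 3-per-page list, missing only from the third snapshot.
p likely_scrape_skip?([true, true, false, true, true], 6, 3)
```

Anything that fails this check (missing mid-page, or missing on several days) needs one of the other explanations below.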

A skipped review caused by a deletion can never appear in the middle of a page. A review missing mid-page could only happen if…

The user deleted their review

The comment was flagged and automatically removed for review

Does the system attempt to find bot reviews too?

The system is badly coded and is skipping some entries in the database

Then why aren’t the anomalous results random?

Third party vendors are adding or removing reviews, but out of sync

Something like Facebook reviews being imported in batches at certain times of the day?

Rotten Tomatoes are doing… something

If further proof is needed that the script works, look at the Snapshot 5 confirmation workbook.

The Snapshot 5 confirmation workbook contains results from 2 snapshot passes, run one after the other. If the script were broken, then with over 6,000 reviews the sheets would differ. The HTML dumps are in the master dump zip archive.

Both snapshots were taken late in the evening on 24/11/2023. The first run is on the left, second on the right. Differences are highlighted in red. There is only 1 issue and it’s the first review.

These runs took around 40 minutes each. That means within 40 minutes of me starting the snapshot, the first review was removed from the system as it doesn’t appear on the right. Why? There’s no way to know.

It just so happened to be a 0.5 review too. What are the odds! 1/10 actually.

This is why I logged the account URLs, where present. This account’s URL reveals, within a few clicks, that the review is present today. Exactly the same review; nothing has changed. So why was it removed so quickly and then put back up?

Are bad reviews being immediately flagged to make the movie appear better than it is? Is ‘someone’ running psy-ops to automatically flag low reviews so their movie appears more liked?

What Could be Happening

I would like to declare victory over Rotten Tomatoes for discovering manipulation in their public-facing system, but I don’t think there is any. At least, I can’t figure out why anyone would do it.

The differences in average review scores are negligible. If we pretend there is intentional manipulation, the average score only differs by around 0.1. That’s nothing. Would you go see a movie purely because it was rated 3.7 instead of 3.6? On a 100-point scale, that’s 74 (3.7) versus 72 (3.6). This is all assuming the rating goes up, not down.

If anyone wanted to massively manipulate the average score, a thousand 0.5 or 5 star reviews would need to be injected. That would be easily identifiable, and it’s not happening. Perhaps the end goal is to keep this horrifically bad movie looking average by mixing in average to high reviews?
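The arithmetic behind that claim is a plain weighted mean. This sketch assumes the roughly 3.6 average and the 6,747 reviews from the final snapshot quoted earlier; `mean_after_injection` and `injections_needed` are hypothetical helper names.

```ruby
# Mean after adding `added` reviews that all carry `score`.
def mean_after_injection(mean, count, added, score)
  (mean * count + score * added) / (count + added)
end

# Reviews of `score` needed to move `mean` to `target` (round up in practice).
def injections_needed(mean, count, target, score)
  (target - mean) * count / (score - target)
end

count = 6_747 # final snapshot size quoted earlier; 3.6 is the approximate average
puts mean_after_injection(3.6, count, 1_000, 5.0).round(2)
puts mean_after_injection(3.6, count, 1_000, 0.5).round(2)
puts injections_needed(3.6, count, 3.7, 5.0).round(1)
```

With those inputs, 1,000 injected 5 star reviews lift the mean to about 3.78, 1,000 half-star reviews drop it to about 3.2, and roughly 519 five-star reviews are needed just to move 3.6 to 3.7.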

The data doesn’t lie though. Reviews are being inserted and removed from the system.

It could be due to:

Users removing their own reviews

Users editing their own reviews

Do these get removed and need approval before going live again?

Psy-ops flagging reviews, which I assume immediately removes them from the site?

Not necessarily the general public!

If legitimate, users may take a few days to update their reviews

Historical reviews being ingested from third parties, which means they would appear to be inserted at random

Entities flooding the system with high rated reviews, not to manipulate the average score but so users reading reviews see these high ratings first?

I’m not ruling out corporate greed though. Rotten Tomatoes needs advertisers and sponsors. If the site has pages upon pages of reviews calling ‘The Marvels’ hot garbage, why would the studio pay for advertising space promoting the movie next to a bad review?

So perhaps there is a nefarious entity adding mid and positive reviews? Not to increase the average score, but so users see more positive comments when browsing new ‘reviews’. Is that important? Especially when the movie is a flop and no one is going to see it.

Reviews are meaningless if people know the system is compromised. They learn this through opinions of friends, family and trusted sources. Hence why ‘The Marvels’ is a catastrophic failure despite user reviews of around 3.6/5 (72/100).

How can a movie with a positive rating of over 70% lose hundreds of millions of dollars? Because the real rating is nowhere near 70%. These ‘review’ sites are now trapped in the same socially engineered bubbles as the likes of Twitter and Facebook, populated by a delusional minority who believe they’re the majority.

Good luck explaining that to furious investors and the inevitable bankruptcy judge.

So what’s going on with Rotten Tomatoes? Is it a badly designed system or is it being manipulated?

For the Record

To anyone who is still under pignosis; STOP VISITING THESE SITES!

All their content is fake news. Whether it be intentional or due to public manipulation. Watch trailers, investigate and decide for yourself. Don’t listen to other people.

Even me! Read through the data yourself and draw your own conclusions. Write scripts and check the live data. It’s easy enough.

Before my brain implodes, there’s one last thing to point out…

A COMMENT IS NOT A REVIEW !!!

Here’s a randomly picked selection of COMMENTS from an ocean of shit ‘reviews’:

[5] “Ending was awsome!!! must see...”

[4] “This was a lot of fun. Everyone needs to calm down a bit on their overreactions.”

[5] “The movie was very entertaining and set up a lot for the future of Marvel not all will like it but I found it very good and recommend it for others”

[1.5] “Needs a better timing script”

[5] “movie was great! highly watch ahain”

[0.5] “A $2m movie made for $250m”

[1] “The movie was really bad. What a waste of time”

[5] “It was a fun movie to watch.”