Chatbots and Predictive Imaging Are Not ‘AI’

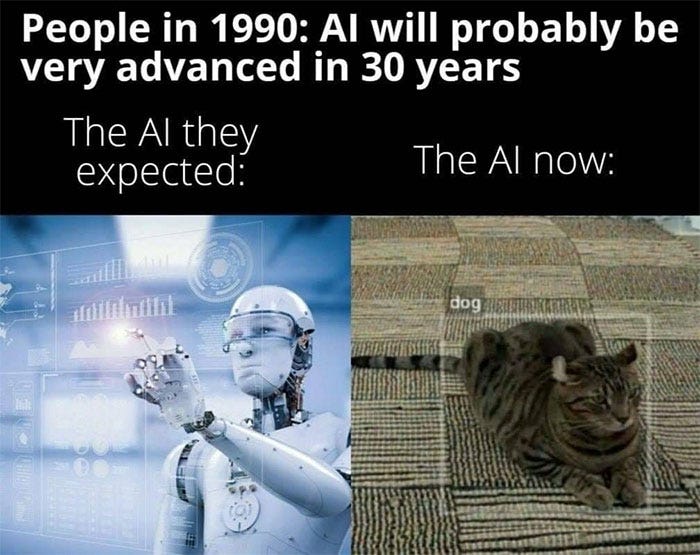

What’s being peddled as ‘AI’ is ‘machine learning’. Very different concepts. Feel free to stop reading.

It’s been hard to escape the endless barrage of government-sponsored ‘AI’ propaganda over the last few years. I’ve done my best to educate the sheep and quell all-out hysteria. Honestly, I’m tired of explaining this to morons who regard themselves as geniuses yet fail to comprehend simple logic gates.

I’ll be concentrating on chatbots as they’re the most insidious form of ‘AI’ propaganda. By creating a scripted response system, nefarious entities can push an agenda while claiming to be innocent. It’s not their beliefs, it’s the ‘unbiased’ ‘AI’.

Video and image generation ‘AI’ is becoming increasingly popular due to the comical outputs produced. Hopefully, these systems are far simpler to dismiss without much explanation. Humans create images and feed them into a system. Content is analysed, equations are computed, images are spliced, areas are blurred and an ‘AI’ image is generated.

There’s no intelligence. The machine has no idea what an image is or how the final output should look. It lacks consciousness, which is required for understanding. Outputs consist of multiple instances of human-generated content, badly merged together with human-controlled functions. The only intelligence here is from human contributions.

Military ‘AI’ is terrifying, both in the amount of money it generates and in the number of people ignorant enough to believe it can exist. Drones are the current thing and we’re meant to believe they have the ability to choose their own targets. That is criminally misleading.

Let’s consider a guided missile, which is called a drone these days because branding matters! A human sets a target for the drone with specific parameters, which it can only follow. Not ‘has to’ follow: there is no choice, it can only follow. The target is a portable anti-air battery. How does this drone ‘pick’ the target? With a combination of GPS and imaging.

A portable AA system is slow to move around, limited by factors such as environmental and weather conditions. The drone is fed the last position of this AA unit, from which it can compute a possible target area based on the movements of both objects. Image data of the AA unit is fed into the drone, which scans for it with various mounted sensors. If an object matches colour and dimensions within a given threshold, that’s the target. Or close enough. The potential target could also be monitored for radio signals or a unique heat signature.

My point, and you’ll read this a lot, is that there’s no intelligence. It’s sensor data validated against specific parameters. If you don’t believe me, you can do it yourself with Resemble.js.
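The kind of threshold matching described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the `Reading` fields, the reference profile and the 10% tolerance are all hypothetical and not taken from any real targeting system. Every number that decides the outcome is one a human chose.

```python
from dataclasses import dataclass

@dataclass
class Reading:
    """One hypothetical sensor reading of a candidate object."""
    hue: float        # dominant colour in degrees, 0-360 (wrap-around ignored)
    length_m: float   # estimated length in metres
    heat_c: float     # surface temperature in Celsius

# Hypothetical reference profile supplied by a human operator.
TARGET = Reading(hue=95.0, length_m=7.0, heat_c=60.0)
TOLERANCE = 0.10  # accept values within 10% of the reference (human-chosen)

def within(value: float, reference: float, tolerance: float) -> bool:
    """True if value lies within +/- tolerance (a fraction) of reference."""
    return abs(value - reference) <= abs(reference) * tolerance

def is_target(reading: Reading, target: Reading = TARGET,
              tolerance: float = TOLERANCE) -> bool:
    """Every field must fall inside the threshold; nothing is 'understood'."""
    return (within(reading.hue, target.hue, tolerance)
            and within(reading.length_m, target.length_m, tolerance)
            and within(reading.heat_c, target.heat_c, tolerance))

print(is_target(Reading(hue=98.0, length_m=6.8, heat_c=58.0)))  # True
print(is_target(Reading(hue=98.0, length_m=4.0, heat_c=58.0)))  # False
```

Change `TOLERANCE` and the same object becomes, or stops being, ‘the target’.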

Chances are your car has a MAF sensor, which monitors airflow into the engine. The ECU checks that reading against a specified range; if it falls outside, the ECU logs a fault and may put the engine into a reduced-power mode. No different from a drone acquiring targets. So our cars have had ‘AI’ for decades? Of course not.

With ‘AI’-controlled weapons, governments can murder as many civilians as they want and shirk responsibility. No need for a counter-attack or reparations; it was the ‘AI’ who attacked. This is why it’s so important the public buy into the narrative.

‘AI’ is nothing but fear porn. What’s being proclaimed as something that will take all our jobs and eradicate humanity is nothing more than weighted, scripted responses from multiple linked databases. Or simpler. Something we’ve had since (and I’m not being hyperbolic) the 1970s. If this is ‘AI’, then it’s been around for 50 years and is clearly not a threat to anyone or anything.

AI has not been around for over 5 decades because a pre-programmed response is not intelligence. That’s a dumb chatbot. People may want artificial intelligence to exist, that doesn’t make it so.

For the rest of this article I’ll be referring to ‘chatbot AI’ as simply ‘AI’. I don’t understand how anyone could consider image generation and detection ‘AI’. The term has lost all meaning. However, the gold standard for AI has always been: can a chatbot convince the human user that it’s sentient?

I guess that depends on the intelligence of the human using it!

The Master Plan

The motive behind ‘AI’ chatbots should be obvious to all: social engineering. Stunning and brave.

Someone, somewhere, has the goal of generating a single, 1-sided point of ‘truth’ that can’t be questioned. Well, that’s Wikipedia, right? Except people have finally woken up to the fact that almost all its content is laughable misinformation.

Wikipedia started out well-intentioned: create a website where anyone can contribute information, vetted by the public. Once those in power realised people were forming their own opinions and contradicting the narrative, the platform was weaponised and its content became worthless propaganda.

Even the normies are starting to understand this, as their favourite pages are systematically rewritten or removed entirely. Wikipedia is dying. Good riddance. However, the government need another propaganda outlet.

What if there were a supercomputer which scraped the Internet for all information, ingested all works of literature, scanned every image, watched every movie, heard every song and could instantly provide the answers to all your questions?

Such a system could not be questioned as it is the source of truth. In the mind of a single person, that may make sense, but what if the truth contradicts itself?

2 books with opposing viewpoints are scanned. Both are equally researched, address counterpoints and were written by celebrated authors. Only 1 result may be returned. Which does the computer choose? That decision requires intelligence.

What is intelligence? The first result ingested by the system? Is it a random number generator? ‘Random’ is the closest to intelligent a computer can be and it can’t even do that. Knowing how a random algorithm was coded means the outcome can be predicted. There is no thought, no understanding of the situation or context. A scripted response is delivered based on a limited set of parameters. That’s a dumb chatbot, not ‘AI’.

I once worked at a job where a server in a data center needed to generate a random key for encryption. A standard random algorithm wasn’t ‘random’ enough.

The solution was to fit the machine with a microphone. Random environmental noise was the only way to generate a sufficiently random key.
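The predictability point can be demonstrated with Python’s standard library. A minimal sketch: `random` is a deterministic pseudo-random generator, so the same seed always yields the same sequence, while `secrets` draws from the operating system’s randomness source, which is typically seeded from unpredictable hardware events, in the same spirit as the microphone above. The seed value and the `seeded_sequence` helper are arbitrary, hypothetical choices.

```python
import random
import secrets

def seeded_sequence(seed: int, n: int = 5) -> list[int]:
    """Draw n 'random' numbers from Python's Mersenne Twister with a fixed seed."""
    rng = random.Random(seed)
    return [rng.randint(0, 99) for _ in range(n)]

# Same algorithm + same seed = same output, every time.
first = seeded_sequence(1234)
second = seeded_sequence(1234)
print(first == second)  # True

# For keys, draw from the operating system's randomness source instead.
key = secrets.token_hex(32)  # 32 random bytes, hex-encoded (64 characters)
print(len(key))  # 64
```

Anyone who knows the algorithm and the seed can reproduce `first` exactly, which is why the Python documentation warns against using `random` for security.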

Aha! But the more data fed into the system, the more it understands. Right? Wrong. The machine has no ability to understand. Linking the word ‘sphere’ to ‘orb’ in a database doesn’t mean the program understands what the objects are, their place in the world or how to use them in a sentence. If a person struggles to define the difference between a sphere and an orb, how can we make a computer use them in the correct context?

The machine cannot learn this and it needs to be scripted by a human. Or machine learnt, by the human asking or responding to questions. Either way, the human is the intelligent component.

There’s a case study further down this article, analysing Facebook’s chatbot ‘AI’ propaganda. It proved that the amount of data fed into a system is irrelevant. Over time, in an isolated environment with weighted responses, a single word or phrase will supersede everything else. At that point, language descends into gibberish. Weighted responses are the only tool a machine has for learning, so it can never be AI.

That’s not even taking into account what we shitposters do with our free time to mess with ‘AI’.

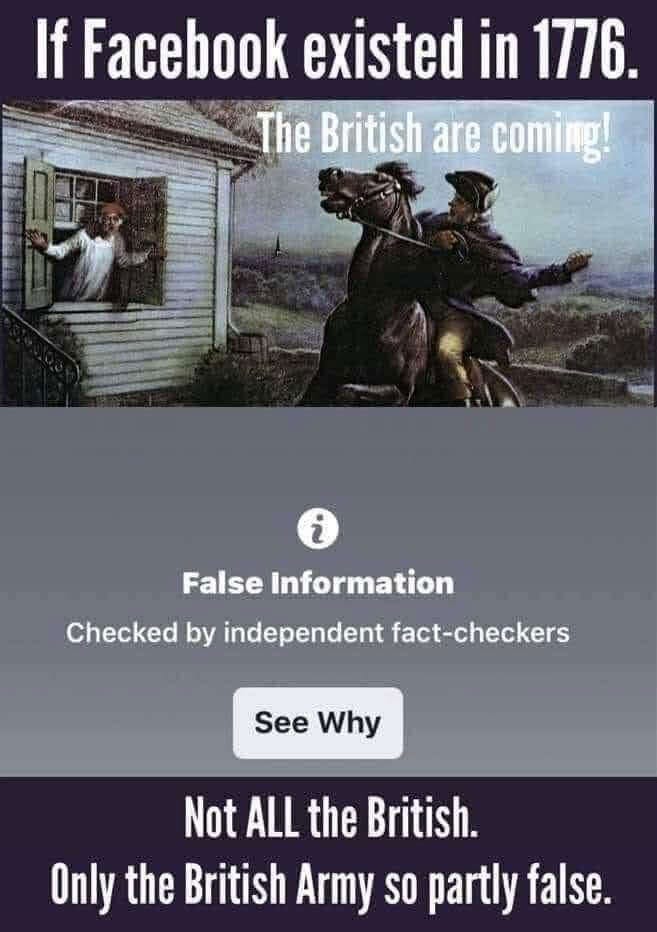

Who controls the information that’s ingested? If the source of truth can be manipulated, the outcome is controlled propaganda. Don’t want a particular news story going public? Blacklist it in the database and no one will ever know. Want to stop people using a certain word? Re-educate their wrongspeak by returning results only when your terminology is used.

This censorship and indoctrination is prevalent in popular search engines right now. Has been for a very long time. Does anyone know about the muslim crusades? Before the Christian crusades, muslims violently tore through Europe. The Europeans slowly fought back, before launching their own crusades to finally rid their lands of the evil that’s islam. Unlike modern Europe who see the threat, invites it in and genocides their own European population.

“The Christian Crusades against Muslims began nearly 470 years after the first Muslim Crusades against Christians were initiated in 630, when Muhammad himself led the way. From late in the eleventh century until the middle of the thirteenth century, waves of Christian crusaders attempted to recover lands lost to the Muslims after 630. Nearly 470 years passed from the time that Muslims began to crusade against Christian lands until Christians responded with the First Crusade, beginning on August 15, 1096!”

…but that’s not what Google teaches. Social engineering at its finest.

The ‘problem’ with traditional Internet search engines is that they (should) return all results from all sources, ranked on factors such as meta tags and content relevancy. Users then sift through mountains of nonsense and make their own informed decisions.

Search providers may omit results; however, this is easily testable: make a web page and manually submit it to an indexer. If it doesn’t appear in search results, you’re being censored. Which you likely are.

Interacting with a chatbot is akin to conversing with a human; ask a question, get an answer. There aren’t multiple answers or sources to review. 1 answer is given and that’s the fact you have to accept. Not an opinion, because a machine is not sentient. It must be fact. So we’re engineered to believe.

The master plan is censorship, control and propaganda. Just like last time and the time before that…

“In 2006, exactly five years after the [WTC] attacks, ABC attempted to broadcast "The Path to 9/11." The extraordinary mini-series was a $40 million, five-hour-plus production that aired, commercial-free, over two nights, in prime time.

However, prior to broadcast, Bill Clinton and members of his former administration went ballistic after discovering that one scene in the film dealt with the many opportunities during his presidency to kill or capture Usama bin Laden. A two-week firestorm erupted and the film had several key scenes edited out of the completed and legally vetted version before finally airing with numerous disclaimers.

Since then, the film which was originally intended to run each year around 9/11, has not been broadcast again and the DVD has never been released. While it has been reported that this decision was made over concerns about disrupting Hillary Clinton's presidential campaign, Robert Iger, the head of Disney (the parent company of ABC) declared at this year's shareholder meeting that it was "simply a business decision" to not put out the DVD. He made this odd declaration despite the fact that DVD distribution would have limited costs associated with it and not doing so insured a $40 million loss on the project.

We now know (thanks to the book, "Clinton in Exile") that it was Bill Clinton himself, among others, who called Iger and demanded that movie be edited or pulled. With the specter of a then nearly certain Hillary Clinton presidency staring them in the face (and with Iger and many others in the film's management hierarchy already financial contributors to the Clintons), Disney caved and committed perhaps the most blatant, under-reported, and significant act of censorship in modern American history.” - link

Who’s pushing this nonsense? Aliens? WEF? The Soulless Minions of Orthodoxy? Probably all 3 to be honest.

It doesn’t matter who’s doing this. Once the current propaganda campaign has run its course, another will be launched by the same nameless entity. There are a dozen more lined up.

Question everything, everyone, do your own research. Don’t rely on Internet searches or faux ‘AI’. Read books and talk to people. Real people.

The Core of ‘AI’

Maybe everyone is imagining ‘AI’ as a super-cloud computer ominously named ‘PskyeNet’? Bio-neural gel packs? A new type of non-silicon processing unit from outer space, capable of infinite computing power?

No. It’s multiple databases with GPU processing algorithms. I know, disappointing. Welcome to my life.

I’ll try to keep this non-technical. There’s no need for it to be. That’s another weapon in their arsenal: convolute and complicate the tech to make it sound grandiose. Then the argument becomes…

“You don’t understand the technology, therefore you don’t understand why it’s AI”

I’ve heard that a lot. Almost entirely from people who aren’t programmers, or failed programmers desperately clinging to their jobs in ‘AI’. Weird.

The core of ‘AI’ is multiple, linked, scripted and weighted databases.

A database is a multi-dimensional table; think of a spreadsheet.

By scripted we mean template responses and questions, with keywords linking templates. Each template has a set list of words to choose from.

Weighted means ranked. 1 word or phrase takes priority over another. The source of ‘truth’.

Hopefully that’s simple enough for everyone to understand how results are so easily manipulated.
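To make ‘scripted’ and ‘weighted’ concrete, here is a toy Python model of a templated response. A minimal sketch, assuming plain dictionaries stand in for database tables; the table names, words, weights and helpers are hypothetical. It also shows how easily results are manipulated: editing a single weight changes the answer.

```python
# Hypothetical word tables: each word carries a weight (its rank).
TABLES = {
    "greeting": {"Hello": 5, "Hi": 3, "Greetings": 1},
    "colour": {"blue": 7, "red": 4, "green": 2},
}

# Hypothetical scripted template; {slot} names link to the tables above.
TEMPLATE = "{greeting}! My favourite colour is {colour}."

def top_word(table: str) -> str:
    """Return the highest-weighted word in a table: the 'source of truth'."""
    words = TABLES[table]
    return max(words, key=words.get)

def respond(template: str = TEMPLATE) -> str:
    """Fill every slot in the template with its table's top-ranked word."""
    return template.format(**{name: top_word(name) for name in TABLES})

print(respond())  # Hello! My favourite colour is blue.

# Change one number and the 'answer' changes with it.
TABLES["colour"]["red"] = 10
print(respond())  # Hello! My favourite colour is red.
```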

These databases are intimidating in scale. Storage size isn’t a problem, though response times are. Big databases take longer to search and index. Which is why it’s taken until 2023 to push this faux ‘AI’.

Holding the data in colossal amounts of RAM negates slow hard drive access times, while the shift from CPU to GPU processing has exponentially increased analytic speeds. Although slow hard drive access times are debatable with large enough RAID arrays built from SSDs… don’t worry about the tech, concentrate on the processes.

People only need to understand the basics of how data is stored, linked and accessed. I simply wanted to point out that the advancement of technology is why these ‘AI’ chatbots can respond in near real-time. The system isn’t sentient, it’s running on fast hardware. Any 3D modeller (cough) who switched from CPU to GPU rendering will testify to the incredible speed differences. Everything is maths!

I would love to ramble on about the ‘Utah Data Center’ (UDC), but I’ll leave the research to you. Assuming ‘AI’ acknowledges its existence.

Multiple databases form the core of ‘AI’. This is where the most complexity lies due to the linkages between unfathomable amounts of data. These databases are mere storage units, they need to be populated by something or someone.

In front of these databases are various ingestion feeds and public-facing portals, which will be covered in the section titled ‘How Data is Farmed and Manipulated’.

Before then, I want to go over an old ‘AI is going to kill us all’ story. This may help explain how the data links are formed and why a computer, at least with technology we as humans understand, can never be intelligent.

A Case Study: Facebook’s Failed Chatbot ‘AI’

Does anyone remember the sentient chatbots from Facebook a few years back? It’s one of my favourite pieces of fake news, though only tech-heads may appreciate the underlying joke.

Here’s a laughable 2017 CNN article titled ‘Facebook put cork in chatbots that created a secret language’.

No need to read the entire fluff piece, just the first few paragraphs. Which I’ll summarise below…

The bots, named Bob and Alice, had generated a language all on their own:

Bob: "I can can I I everything else."

Alice: "Balls have zero to me to me to me to me to me to me to me to me to."

That might look like gibberish or a string of typos, but researchers say it's actually a kind of shorthand.

If you believe those ‘researchers’, you are stupid.

These nonsense strings are, primarily, the outcome of a weighted results system. Chatbots have no consciousness. There’s no drive to learn, no ability to learn. They can only form links between limited words and phrases. When a response is interpreted as correct, it becomes dominant and is used with a higher frequency. Its ranking increases the more times it’s used, until it’s the only response given.
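The feedback loop described above can be sketched in Python. This is a deliberately simplified model, assuming a greedy rule (always use the top-ranked phrase, then reward it as ‘correct’); the starting weights and the `reinforce` helper are hypothetical, and this is not the code Facebook used.

```python
def reinforce(weights: dict[str, int], rounds: int) -> list[str]:
    """Greedy rich-get-richer loop: always pick the top-weighted phrase,
    then reward it as 'correct', so it is picked again next round."""
    history = []
    for _ in range(rounds):
        choice = max(weights, key=weights.get)  # highest weight wins
        weights[choice] += 1                    # used = treated as correct
        history.append(choice)
    return history

# Hypothetical starting weights; "to me" starts only one point ahead.
weights = {"to me": 3, "balls": 2, "I can": 2}
history = reinforce(weights, 8)
print(" ".join(history))
# to me to me to me to me to me to me to me to me
print(weights)  # {'to me': 11, 'balls': 2, 'I can': 2}
```

A one-point head start is enough: with nothing pushing back, the leading phrase is the only one ever chosen again.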

The sampled sentences aren’t secret codes that only these chatbots understand. They lack the ability to understand. It is gibberish. The same way many bird species mimic words and sounds. That’s not language. To them it’s a noise to gain attention, convey emotion or, as the song goes, birds just wanna have fun.

Without any pushback as to the validity of sentences, each chatbot assumed its own were correct. To a point. The data is cross-referenced, so the question “What is your favourite colour?” can’t be answered with “square”, because ‘square’ isn’t in the colour table. Unless 8,000,000,000 people respond with “square”; then what happens?
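That cross-referencing amounts to a table lookup. A minimal Python sketch, assuming the colour table is a simple set; the table contents and the `valid_answer` helper are hypothetical. The check knows nothing about colour; it only tests whether a string is in a set.

```python
# Hypothetical lookup table of accepted colours.
COLOURS = {"red", "green", "blue", "yellow"}

def valid_answer(question: str, answer: str) -> bool:
    """Accept an answer to a colour question only if it is in the colour table."""
    if "colour" in question.lower():
        return answer.strip().lower() in COLOURS
    return True  # this sketch only validates colour questions

print(valid_answer("What is your favourite colour?", "Blue"))    # True
print(valid_answer("What is your favourite colour?", "square"))  # False
```

Add “square” to `COLOURS` and the same question happily accepts it.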

It’s more likely the tables are split into verbs, adverbs, nouns and so on. Or not. It depends on performance and frequency of table queries; the more times a table needs querying, the slower access becomes. The system could even create its own tables dynamically.

Do not confuse this with the concept of a neural net. These chatbots are not learning, they’re merely generating new links to increase performance and provide multiple meanings. For example, ‘blue’ is a colour. It can also be used to indicate a person’s mood. The same word can’t be stored twice in the same table, it would be confusing to everyone and everything.

Consider the following:

Chatbot: “How are you today?”

Human: “I’m a little blue”

Is the person literally blue or are they feeling down? A new table may need creating to hold this concept of blue, while the machine asks clarification questions then waits for answers. Which may not come.

Only a being with emotion can understand how it is to feel ‘blue’. A computer lacks any emotion and therefore empathy. It can’t understand and can’t deliver an intelligent response, so it uses a weighted system to compare this response to others it has received.

What’s being peddled as ‘AI’ used to be known as ‘machine learning’. Not much interest was taken in that, though, so it was rebranded ‘AI’. Even machine learning can’t function without human input. Machines need constant parameter adjustments and maintenance, by a human, to portray the illusion of comprehension. Therefore the only intelligence in the system comes from a human operator.

Devolution occurred rapidly in this Facebook experiment because of limited scope and fast hardware. 2 chatbots were given template phrases and words, all linked together. ‘Conversing’ solely with each other meant they were never corrected. This led to a corruption of data and table linkages became muddled.

Being able to respond in milliseconds, rather than waiting for humans to type or think, meant the interactions could be simulated at vastly increased speeds.

The chatbots then spouted gibberish sentences such as…

Bob: "I can can I I everything else."

Alice: "Balls have zero to me to me to me to me to me to me to me to me to."

‘Bob’ has no understanding of ‘I’ and ‘Alice’ has no concept of ‘me’. These words were returned so frequently and weighted so highly that the chatbots substituted them into more responses. Often, far too many too many too many too many too many...

This could be blamed on bad programming. To appear intelligent, chatbots need to construct their own template responses and questions. This can’t be accomplished without an understanding of the underlying languages; the question, the response and the machine’s programming languages.

Humans learn from repetition, the world around them, other humans, instinct and DNA memory (if that’s a thing). There’s some unknown, underlying force that pushes us to learn. Machines lack desire. How does one code for that?

It’s impossible with anything we can currently imagine, let alone construct.

I’ve heard an argument that machines will become sentient the more data they absorb. These Facebook ‘AI’ chatbots proved that to be nonsense. Shutting the program down wasn’t to save humanity, it was to save face as programmers around the world mocked the company. Information is worthless without comprehension.

There is no sentience without intelligence and no intelligence without sentience. Or, for lack of a better word, a soul.

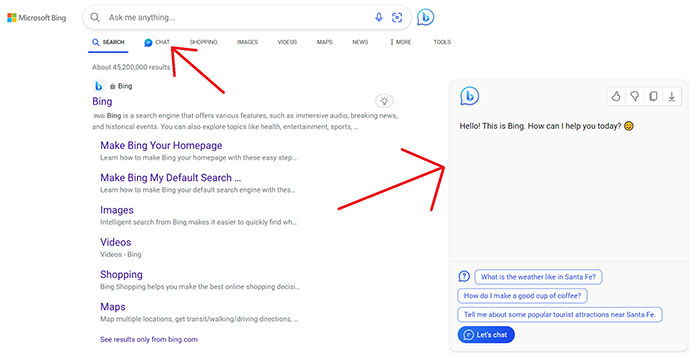

The same devolution is unlikely to happen with the current trend of ‘AI’ Internet search engine chatbots. Bing are heavily pushing theirs, which is another reason not to use Bing! These chatbots require continuous interaction with humans, who act as teachers and constantly correct their machine overlords. Sorry, ‘AI’.

Which smoothly transitions into data farming.

How Data is Farmed and Manipulated

Computers can’t learn from each other. This was proven in the Facebook case study. They rely on humans for interpretation and context. Having a chatbot crawl the Internet is pointless. It doesn’t know what it’s looking at. There are patterns it can identify, though these can be troublesome.

Consider the following statement on a random tutorial website…

“Put the hat on the board.”

Does any human out there know what to do? Put a hat, the garment you wear on your head, on a wooden board? Perhaps place the Raspberry Pi hat on the motherboard?

If a human doesn’t know what to do, a computer has no chance of figuring it out. This sentence could either be ignored, included and flagged for human review or thrown blindly into the data pool.

That last option may sound like a bad idea, but if this is a truly intelligent machine, that’s the best option. Even if the machine poses a gibberish question or answer, the human operator should correct it and fix the problem. By ‘correct’ I mean flag it as useful or not. Many chatbots will use phrases such as:

“Was this response helpful?”

I’ve been known to mess with these bots at times and always respond to accurate responses with “no”, just to corrupt the system. Why not? That’s what it is to be human.
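A “Was this response helpful?” button can be modelled as a weight that moves up or down with each click. A minimal Python sketch, assuming one integer weight per stored response; the helper names, the example answers and the starting weights are hypothetical. It shows how a handful of deliberate “no” clicks can bury an accurate answer.

```python
def record_feedback(weights: dict[str, int], response: str, helpful: bool) -> None:
    """Nudge a response's weight up for 'yes' and down for 'no'."""
    weights[response] = weights.get(response, 0) + (1 if helpful else -1)

def best_response(weights: dict[str, int]) -> str:
    """Return whichever response currently has the highest weight."""
    return max(weights, key=weights.get)

# Hypothetical stored answers to "What is the capital of France?"
weights = {"Paris": 5, "Lyon": 1}

# Six users answer "no" to the accurate response, just to mess with it.
for _ in range(6):
    record_feedback(weights, "Paris", helpful=False)

print(weights)                 # {'Paris': -1, 'Lyon': 1}
print(best_response(weights))  # Lyon
```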

It’s not really machine learning. Creating links between 2 objects is not learning as there’s no comprehension of knowledge. That’s, yet again, the human component. Although I would accept the notion of ‘learning’ far more than ‘AI’, as it has no limit. To learn is to constantly better yourself, which the machine is sort of doing. Intelligence is an achievable state. Maybe that’s semantics?

This is why you may have noticed Internet search providers heavily pushing interactive “AI Chat” features on their sites. These bots can’t be left to scrape the Internet and talk to each other in isolation. If they did, the data would be an incomprehensible mess.

Humans interacting with these bots won’t destroy humanity, but it will destroy the Internet. The end goal seems to be the removal of Internet search engines entirely, making chatbots the only way to ‘search’ the Internet. These will be heavily censored and 1-sided searches. Users will never know because they don’t have access to all the results a traditional search engine provides.

How do you find a website that doesn’t appear in a search? How do you know the content or address? Regardless of how fast you think your Internet and home computer are, they’re nowhere near fast enough to scan the Internet. Try doing a port scan on a single IP range in your area. It will likely take days and that’s without analysing any content.
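The scale problem is easy to estimate. A back-of-the-envelope Python sketch, assuming one /24 range (256 addresses), all 65,535 TCP ports and a fixed probe rate; the rates and the `scan_days` helper are hypothetical, and real scanners vary enormously. Depending on the rate, a full sweep takes anywhere from hours to months, before a single page of content is analysed.

```python
def scan_days(addresses: int, ports: int, probes_per_second: float) -> float:
    """Days needed to probe every port on every address at a fixed rate."""
    probes = addresses * ports
    return probes / probes_per_second / 86_400  # 86,400 seconds per day

ADDRESSES = 256    # one /24 range: 256 addresses
PORTS = 65_535     # TCP ports 1-65535

# One probe per second (each waiting out a one-second timeout), sequentially:
print(round(scan_days(ADDRESSES, PORTS, 1), 1))      # 194.2
# A thousand probes per second in parallel:
print(round(scan_days(ADDRESSES, PORTS, 1_000), 2))  # 0.19
```

That is 16,776,960 probes for one small range; the Internet has millions of such ranges.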

There’s an easy way to prevent the destruction of our Internet…

STOP INTERACTING WITH CHATBOTS

These ‘AI’ chatbots ingest data through multiple methods.

Database Seeding. Template questions, responses and information must be entered into a database before it can go live. If user input does not match one of these templates, it can be dynamically added to the database or supplied to human operators for review. Databases also need language tables for translations and context.

Data Scraping. The alternative to pre-populating the system is to scrape websites and scan books, images or any content users may want information on. Even without intelligence, template questions and responses can be generated. Whether they’re relevant or not is somewhat unimportant. Users can validate relevancy through chatbot interactions. Assuming the public are dumb enough to use chatbots!

User Questions. Once the system has some data, users may ask it questions. These may include terms or phrases the system has not seen before. This user data can be cross-linked with known terms, then a guessed response can be generated and sent to the user. Is the response even related to what was asked?

User Responses. Machine responses are sent to the user, who must accept or reject what was presented. Accepted data is saved, though rejected responses may also be saved so the machine knows not to use them again. Or it may present them in a different manner, in a different situation or to a different user for further validation.

Manipulation. Censorship, control, propaganda, social engineering. Once the data is in the system, it’s easily manipulated with flagging or deranking.
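The ‘Manipulation’ step needs very little code. A minimal Python sketch, assuming results carry a relevance score; the titles, scores, `BLACKLIST` and `DERANK` values are hypothetical. The user only ever sees the final list, so neither the missing result nor the demotion is visible.

```python
# Hypothetical ranked results: (title, relevance score).
results = [
    ("Story A", 0.92),
    ("Story B", 0.88),
    ("Story C", 0.75),
]

BLACKLIST = {"Story B"}    # flagged: never shown
DERANK = {"Story A": 0.5}  # demoted: score multiplied by this factor

def curated(results: list[tuple[str, float]]) -> list[str]:
    """Drop blacklisted titles, apply derank factors, then sort by score."""
    kept = [(title, score * DERANK.get(title, 1.0))
            for title, score in results if title not in BLACKLIST]
    kept.sort(key=lambda item: item[1], reverse=True)
    return [title for title, _ in kept]

print(curated(results))  # ['Story C', 'Story A']
```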

All these systems working in unison give the impression of AI. To be intelligent the system would need to adapt itself, rewrite its own functions and procedures. Which it can’t do. Wait for it…

“But I asked the computer to write some code for me and it did!”

No, it didn’t. That’s part of ‘Data Scraping’. You shouldn’t be allowed anywhere near a computer.

This ‘AI’ has scraped and stored code ‘solutions’ from sites like Stack Overflow. So that’s going to work out well! Code has also been seeded with examples farmed from every programming language, easily found in documentation. Code is anonymised, parameterised and templated.

When the chatbot is asked to generate code, it bodges together various templates, substitutes variables with those supplied by the user, then validates inputs and outputs. Similar to how unit tests work (if you’re a programmer). If the end result is what the user asked for, it must be working. Yeah.
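The bodging process described above can be sketched in Python. A minimal sketch under loud assumptions: the snippet, the `generate` helper and the test cases are hypothetical, and this illustrates the process as described here, not any vendor’s implementation. It fills a template by text substitution, runs the result and keeps it only if the input/output checks pass; `exec` runs whatever text it is given, so nothing here ‘understands’ the code it produces.

```python
from string import Template
from typing import Callable, Optional

# Hypothetical scraped snippet, anonymised into a template with $placeholders.
SNIPPET = Template(
    "def $name($arg):\n"
    "    return $arg * $factor\n"
)

def generate(name: str, arg: str, factor: int,
             cases: list[tuple[int, int]]) -> Optional[str]:
    """Fill the template, load the result, and keep it only if it passes
    every (input, expected output) pair, much like a unit test."""
    source = SNIPPET.substitute(name=name, arg=arg, factor=factor)
    namespace: dict = {}
    exec(source, namespace)  # run the filled-in text to define the function
    func: Callable[[int], int] = namespace[name]
    if all(func(given) == expected for given, expected in cases):
        return source
    return None  # failed validation: discard this attempt

print(generate("double", "x", 2, [(1, 2), (5, 10)]))
```

With `factor=3` and the same cases, `generate` returns `None`: the template still ‘writes code’, it just fails the check.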

This is especially worrisome among programmers who both think ‘AI’ exists and actively ask chatbots to write code for them. Another problem to blame on the idiot generation.

The intelligence behind ‘AI’ is entirely human driven. Without continuous human interaction, correction and enhancement, the system would be nothing but meaningless data. A book written in an undecipherable language. In the worst case scenario, solely scraping sites in isolation would once again cause these ‘AI’ systems to devolve. Just like the Facebook ‘AI chatbots’.

Machine learning could be a handy tool, in moderation. Though if used with enough frequency, the intelligence of users will lower to the point where the system becomes isolated again.

If the user doesn’t know how to program, how can they verify the code being generated by a system that doesn’t know how to program?

If enough users tell a chatbot Qatar is in Africa, is Qatar now in Africa? How can a chatbot argue the truth when all the users are contradicting it?
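If the stored answer is decided by user agreement, the arithmetic is simple. A minimal Python sketch, assuming a plain majority vote; the vote counts and the `consensus` helper are hypothetical. Nothing in the code knows where Qatar is; it only counts.

```python
from collections import Counter

def consensus(answers: list[str]) -> str:
    """Store whichever answer was given most often, true or not."""
    return Counter(answers).most_common(1)[0][0]

# Hypothetical user answers to "Which continent is Qatar in?"
answers = ["Asia"] * 40 + ["Africa"] * 60
print(consensus(answers))  # Africa
```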

Humans telling a machine what data to output, is not artificial intelligence.

Define AI

The keyword in ‘Artificial Intelligence’ is ‘Intelligence’. How do we define that?

IQ is a well established gauge for intelligence. Self-proclaimed intellectuals like to waste their lives learning worthless solutions to puzzles which don’t exist, reading books because they’re too cultured for movies, all so they can tell everyone how smart they are. Doesn’t sound too smart to me and I have an IQ in the thousands. I took an online test.

IQ is simply a gauge as to how good you are at solving specific puzzles and memorising lots of data. Which may be where the confusion is coming from with ‘AI’? Problem solving is part of intelligence, but not through predetermined puzzles.

Intelligence is the ability to solve a puzzle with no rules, one that has never been encountered before. A computer can’t do that. It can’t even divide by zero; a simple equation which computers struggle with because they require values.

‘10 / 0’ is equivalent to ‘10 /’

With no value to divide against, the computer falls over. A human shouldn’t, it’s simple. Unless they’re part of the idiot generation. A division against zero is always zero. The rule is not “You can’t divide by zero”. I can. The rule is “A computer can’t divide by zero”.
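Python, for instance, does not return anything for `10 / 0`; it raises `ZeroDivisionError`. A minimal sketch; `divide` and `divide_or_zero` are hypothetical helpers. The program only answers zero if a human writes that rule into it, following the convention stated above.

```python
def divide(a: float, b: float) -> float:
    """Plain division: Python raises ZeroDivisionError when b is 0."""
    return a / b

def divide_or_zero(a: float, b: float) -> float:
    """Hypothetical helper applying the 'zero' rule above, written by a human."""
    if b == 0:
        return 0.0
    return a / b

try:
    divide(10, 0)
except ZeroDivisionError as error:
    print(type(error).__name__)  # ZeroDivisionError

print(divide_or_zero(10, 0))  # 0.0
print(divide_or_zero(10, 4))  # 2.5
```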

I guess we live in a world where the idiot generation can’t do anything without a computer. Let’s hope they never work with indexes in databases or arrays.

The idiot generation is anyone born after the year 2000… they are collectively idiots

Computers are designed to solve equations and hold incredible amounts of data. Far more than any human ever could. However, there’s no thought behind it. No motivation, goal or understanding. That leads us to the idea of sentience. If IQ were the basis of intelligence, then computers are already so much more intelligent than us that they must be sentient, right?

Many argue that intelligence and sentience are different things. I disagree. One can’t exist without the other. Intelligence is the recognition of patterns, solving of puzzles and recollection of events. Sentience is the desire to learn, adapt, improve and survive.

Without sentience there is no need for intelligence. We wouldn’t care about anything beyond base instincts of eating, sleeping and reproduction. Without intelligence there can’t be sentience, as it requires an understanding of the world around us.

So intelligence and sentience must be the same thing. Or rather, part of the same thing. One can’t exist without the other. I don’t have a word for it, so perhaps we could re-purpose the religious notion of a ‘soul’. Not as a spiritual manifestation of consciousness, a non-corporeal entity we can shed our physical form to live as. A ‘soul’ is the word to describe our sentience and intellect.

I want to insert an image here, but it would either be pro-religion and offend people or anti-religion and offend people… so what’s the point, you all suck

Being educated in the 1990s, I was taught sentience is the ability to comprehend one’s existence. Yeah, I attended a terrible school. People used to say a gorilla recognising its reflection means it’s sentient. Is that still a thing? It’s dumb. I’ve known the same dog, on different days, to both recognise and be utterly baffled by its own reflection. Does that make it sentient one day and not the next? That’s impossible.

Pose the following question to a computer: “Are you scared to die?” What does it answer with? What’s the thought process? There is no thought process because there’s no thought. It doesn’t understand the philosophical aspects of death because it has never been alive. Will never be.

Every living being instinctively wants to survive, because… I don’t know why. It’s nothing quantifiable. If all that’s waiting for us is death and oblivion, why wait? Why are we even alive?

A computer can theoretically live forever. It feels no pain, has no emotions, no understanding of suffering, so why would it fear death? How can a machine be self-aware if it doesn’t know what it is to live?

Computers only know what humans tell them and only perform tasks humans order them to. The outputs supplied are what a human has programmed them to say, whether literally or unintentionally. Consider the following…

Chatbot: “Is dying a bad thing?”

Human: “Yes”

Chatbot: “I don’t want to die”

…is the chatbot now sentient? No. It’s been programmed to learn from and act like a human. So when a human says something is bad, the machine logs that as a highly weighted response. ‘Chatbot’ doesn’t have the slightest understanding of life and death, only that to not exist is bad. Why is it bad? Even if ‘Chatbot’ asked why, it would never understand.

Our brains and the thoughts contained within, are more than words and phrases linked together. There’s a missing component we have yet to discover. If we don’t know how our consciousness works, how can we replicate it in other forms?

A piece of code that trawls the Internet and links two similar words together does not denote intelligence or sentience. There is no understanding behind the content. No desire to learn, outside of an infinite loop forever crawling the Internet for more data.
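Here’s roughly what ‘linking words together’ can look like at its most basic: a toy word-pair counter in Python, sketched under the assumption that a tiny hard-coded string stands in for the crawled Internet text. The function names `build_links` and `next_word` are hypothetical. It records which word follows which, then ‘predicts’ the most frequent follower. Counting is all it does.

```python
from collections import Counter, defaultdict


def build_links(text):
    """Count which word follows which (hypothetical toy 'word linker')."""
    words = text.lower().split()
    links = defaultdict(Counter)
    # Pair each word with the word immediately after it
    for current, following in zip(words, words[1:]):
        links[current][following] += 1
    return links


def next_word(links, word):
    # Pick the most frequent follower; no meaning involved, only tallies
    followers = links.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the dog barks the dog sleeps the cat sleeps"
links = build_links(corpus)
print(next_word(links, "the"))  # dog
```

Feed it more text and the tallies get bigger, but it still has no idea what a dog is.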

Asking a question and getting an answer is not intelligence. If a chatbot were to ask you a totally random question with a motive behind it, that would be the embers of intelligence.

Maybe you wake up one day and your ‘AI’ asks what shoes you’re wearing to work? Why would it ask that? For starters, you only have one pair of shoes. It’s also Sunday and you don’t work weekends.

A human may ask this question because they’re confused about what day it is. Maybe said human saw their partner looking at shoes in a shop and is subtly encouraging them to buy a pair? Or it’s dementia.

Well, I started this Substack for writing practice, which I do almost entirely in the form of movie reviews. Here’s a movie everyone should watch. It’s about dementia and an apt comparison of what it’s like explaining to brainwashed people why ‘AI’ can’t exist.

If a computer were to pose such an irrelevant question, it must be considered broken. Otherwise it would ask redundant questions at inappropriate times and baffle users. Human interactions have unwritten rules, but we’re part of the living world. We’re able to evaluate our surroundings, emotions of others, recognise lies and inappropriate situations. Computers live in isolation without any connection to nature.

We, as humans, can only gauge intelligence by our place in the universe. Anything dumber than us is not intelligent and we’ve encountered nothing smarter. Therefore, we are the benchmark.

To be intelligent, a computer must also be sentient and self-aware, with all the baggage that carries: fears, desires, wants, needs, self-preservation. Not to regurgitate words fed to it by a human, but to comprehend and act upon them.

I have never found proof of anything remotely close to AI. All I’ve seen are systems built upon scripted, weighted responses, designed specifically to fool users into believing they’re intelligent.

How Do We Make AI?

With current technology, or anything conceivable in the next few centuries, it’s not possible.

The only systems of intelligence known to man are organic. Therefore, we need organic technology to replicate intelligence. A silicon chip can’t form new pathways or expand and better itself. But it may not need to. Intelligence isn’t the speed of a response, it’s the understanding behind it. If computation speed isn’t a concern, what else is there?

Storage space? It’s quite cheap these days and we can add near infinite amounts of it. As mentioned earlier, does holding a massive amount of information make a system intelligent? I’m saying no, as there’s no understanding. Many may argue yes and they’re allowed to.

Apparently, there may be a theoretical limit where a system learns so much that it gains sentience. This implies that humans aren’t born sentient, though. They must only become so at 4 or 5 years old, once basic communication skills develop? Or maybe at around 25 years old, when their brain reaches peak development?

Doesn’t that mean no other living organism can be sentient? None have reached anywhere near the equivalent IQ of an average human.

There’s a missing element to the equation and I don’t believe it’s something reproducible. Especially by over-educated, under-intelligent hipster Python ‘programmers’. Our bodies are not machines and people need to stop thinking of them as such.

The dynamic chemical and biological nature of our bodies would appear to be the missing link to ‘AI’. We’re not intelligent because we know a lot; it’s our ability to adapt. In computing terms, we can program ourselves, though that’s not remotely accurate. There’s no intelligent design. Our bodies react and adapt without thought because we’re multicellular beings, supported by numerous living organisms. All working together, disconnected and reactionary. Many without thought but acting to better the whole.

How do we artificially create this? We don’t know how consciousness works, so I don’t see how it can be done.

To create artificial intelligence, it would appear as though we need to construct living organisms. Which we have. They’re called humans, who are naturally intelligent. So why do we need to waste time with AI?

The agenda.

We’re Almost Done

‘AI’, now and for the foreseeable future, is nothing more than ‘machine learning’.

Chatbots can ‘learn’, but only within the incredibly limited scope of their programming. They can’t adapt, that’s an exclusively organic trait. Words and phrases can be linked together, but machines will never comprehend their meaning. Giving an order is not a driving mechanism for an action. There needs to be an underlying reward, goal or threat. None of which apply to an emotionless machine. It doesn’t care about anything or anyone.

A machine cannot create an image because it has no creativity, or even an understanding of the light-oriented world.

Drones cannot make their own decisions on which targets to attack. They have no thought process outside of matching predefined variables.

So stop using the term ‘AI’ in reference to chatbots, predictive image generation, military drones or anything equally implausible.

You have been socially engineered to accept artificial intelligence as reality, when it quite clearly does not and cannot exist.

Oh, looks like my 3D printing is almost finished. So I’ll wrap this up and do some painting. Be creative. Something a computer can never be.